爬虫Part2——数据解析与提取 [TOC]

数据解析概述 当需要只是需要部分网页的内容而不是全部时,就要用到数据提取:

Re解析

Bs4解析

Xpath解析

正则表达式 语法 正则表达式(Regular Expression)是⼀种使用表达式的方式对字符串进行匹配的语法规则。

在线测试正则表达式:https://tool.oschina.net/regex/

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 元字符: 具有固定含义的特殊符号 . 匹配除换⾏符以外的任意字符 \w 匹配字⺟或数字或下划线 \s 匹配任意的空⽩符 \d 匹配数字 \n 匹配⼀个换⾏符 \t 匹配⼀个制表符 ^ 匹配字符串的开始 $ 匹配字符串的结尾 \W 匹配⾮字⺟或数字或下划线 \D 匹配⾮数字 \S 匹配⾮空⽩符 a|b 匹配字符a或字符b () 匹配括号内的表达式,也表示⼀个组 [...] 匹配字符组中的字符 # 是否属于[a-zA-Z0-9] [^...] 匹配除了字符组中字符的所有字符 # 这里^表示非 量词: 控制前⾯的元字符出现的次数 * 重复零次或更多次(尽可能多地去匹配) + 重复⼀次或更多次 ? 重复零次或⼀次 {n} 重复n次 {n,} 重复n次或更多次 {n,m} 重复n到m次 匹配:贪婪匹配和惰性匹配 .* 贪婪匹配 # 尽可能长的匹配 .*? 惰性匹配 # 回溯到最短的一次匹配

举例 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 str: 玩⼉吃鸡游戏, 晚上⼀起上游戏, ⼲嘛呢? 打游戏啊 reg: 玩⼉.*?游戏 结果: 玩⼉吃鸡游戏 reg: 玩⼉.*游戏 结果: 玩⼉吃鸡游戏, 晚上⼀起上游戏, ⼲嘛呢? 打游戏 str: <div>胡辣汤</div> reg: <.*> 结果: <div>胡辣汤</div> str: <div>胡辣汤</div> reg: <.*?> 结果: <div> </div> str: <div>胡辣汤</div><span>饭团</span> reg: <div>.*?</div> 结果: <div>胡辣汤</div>

Re模块(Regular Expression) 基本使用 findall() 1 2 3 4 5 lst = re.findall(r"\d+" , "5点之前. 你要给我5000万" ) print(lst)

字符串前加r防止转义,表示原生字符串(rawstring)。

不使用r,则匹配时候需要4个反斜杠:正则需要转化一次,python解释器需要转化。

finditer() 1 2 3 4 5 6 7 8 9 10 it = re.finditer(r"\d+" , "5点之前. 你要给我5000万" ) for i in it: print(i) print(i.group())

要使用.group()提取match对象的value

search() 1 2 3 4 5 s = re.search(r"\d+" , "5点之前. 你要给我5000万" ) print(s.group())

match() 1 2 3 4 5 m = re.match(r"\d+" , "5点之前. 你要给我5000万" ) print(m.group())

如果字符串为"在5点之前. 你要给我5000万"则匹配失败

相当于自带^

compile() 1 2 3 4 5 6 7 8 9 10 rule = re.compile (r"\d+" ) lst = rule.findall("5点之前. 你要给我5000万" ) print(lst) m = rule.match("5点之前. 你要给我5000万" ) print(m.group())

group() 1 2 3 4 5 6 7 8 9 10 11 12 13 14 s = """ <div class='⻄游记'><span id='1'>中国联通</span></div> <div class='⻄'><span id='2'>中国</span></div> <div class='游'><span id='3'>联通</span></div> <div class='记'><span id='4'>中通</span></div> <div class='⻄记'><span id='5'>国联</span></div> """ obj = re.compile (r"<div class='(?P<class>.*?'><span id)='(?P<id>\d+)'>(?P<val>.*?)</span></div>" , re.S) result = obj.search(s) print(result.group()) print(result.group("id" )) print(result.group("val" )) print(result.group("class" ))

分组:使用(?P<变量名>正则表达式)进一步提取内容

实战案例 豆瓣top250电影排行

首先要确认目标数据位置(源码or抓包)

抓不到的时候首先看User-Agent

次数太频繁的时候记得Keep-Alive

正则表达式写的越详细越容易匹配

①用\n\s*去匹配换行和空格;②用.strip()去除空格

match.groupdict()转换成字典newline=""不使用自动换行符

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 import csvimport reimport requestsrule = re.compile (r'<li>.*?<span class="title">(?P<name>.*?)</span>.*?' r'<br>\n\s*(?P<year>\d+) / .*?' r'property="v:average">(?P<score>.*?)</span>.*?' r'<span>(?P<person>.*?)人评价</span>' , re.S) f = open ("data.csv" , mode="w" , encoding="utf-8" , newline="" ) csv_writer = csv.writer(f) url = "https://movie.douban.com/top250" headers = { "user-agent" : "user-agent" , 'Keep-Alive' : 'timeout=15' } for p in range (0 , 250 , 25 ): params = { 'start' : p } resp = requests.get(url=url, headers=headers, params=params) result = rule.finditer(resp.text) for i in result: dic = i.groupdict() print(dic) csv_writer.writerow(dic.values()) f.close()

电影天堂板块信息

html中标签<a href='url'>xxx</a>表示超链接

当编码不一致时,根据网页源代码的标注信息charset=gb2312进行修正: resp.encoding = "gb2312"

verify=False去除安全认证拼接域名时要注意/数量

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 import reimport requestsdomain = "https://www.dytt89.com/" resp = requests.get(domain, verify=False ) resp.encoding = "gb2312" rule1 = re.compile (r"2021必看热片.*?<ul>(?P<ul>.*?)</ul>" , re.S) result1 = rule1.search(resp.text) ul = result1.group("ul" ).strip() rule2 = re.compile (r"href='(?P<href>.*?)'" , re.S) result2 = rule2.finditer(ul) child_href_list = [] for h in result2: child_href = domain + h.group("href" ).strip("/" ) child_href_list.append(child_href) rule3 = re.compile (r'<meta name=keywords content="(?P<name>.*?)下载">.*?' r'<td style="WORD-WRAP: break-word" bgcolor="#fdfddf"><a href="(?P<download>.*?)">' , re.S) download_list = {} for c in child_href_list: child_resp = requests.get(c, verify=False ) child_resp.encoding = "gb2312" result3 = rule3.search(child_resp.text) download_list[result3.group("name" )] = result3.group("download" ) for d in download_list.items(): print(d)

Bs4模块(Beautiful Soup) html语法规则 HTML(Hyper Text Markup Language)超文本标记语言,是我们编写网页的最基本也是最核心的⼀种语⾔,其语法规则就是用不同的标签对网页上的内容进行标记,从⽽使网页显示出不同的展示效果。

1 2 3 4 5 6 7 8 9 10 11 <标签 属性="值" 属性="值"> 被标记的内容 </标签> <标签 属性="值" 属性="值"/>

基本使用 通过网页源码建立BeautifulSoup对象,来检索页面源代码中的html标签。

find(标签, 属性=值) 只找一个find_all(标签, 属性=值) 找出所有

实战案例 新发地菜价 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 import csvimport requestsfrom bs4 import BeautifulSoupurl = "http://www.xinfadi.com.cn/marketanalysis/0/list/1.shtml" resp = requests.get(url) f = open ("菜价.csv" , mode="w" , encoding="utf-8" , newline="" ) csv_writer = csv.writer(f) page = BeautifulSoup(resp.text, "html.parser" ) attrs = { "class" : "hq_table" } table = page.find("table" , attrs=attrs) trs = table.find_all("tr" )[1 :] for tr in trs: tds = tr.find_all("td" ) name = tds[0 ].text low = tds[1 ].text avg = tds[2 ].text high = tds[3 ].text spec = tds[4 ].text unit = tds[5 ].text day = tds[6 ].text print(name, low, avg, high, spec, unit, day) csv_writer.writerow([name, low, avg, high, spec, unit, day]) f.close()

这里通过URL可以发现

规避Python关键字属性的方法

1 2 table = page.find("table" , class_="hq_table" ) table = page.find("table" , attrs={"class" : "hq_table" })

优美图库

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 import requestsfrom bs4 import BeautifulSoupurl = "https://www.umei.cc/bizhitupian/weimeibizhi/" resp = requests.get(url=url) resp.encoding = "utf-8" main_page = BeautifulSoup(resp.text, "html.parser" ) a_list = main_page.find("div" , class_="TypeList" ).find_all("a" ) for a in a_list: href = url + a.get("href" ).split("/" )[-1 ] child_page_resp = requests.get(href) child_page_resp.encoding = "utf-8" child_page = BeautifulSoup(child_page_resp.text, "html.parser" ) p = child_page.find("p" , align="center" ) img = p.find("img" ) src = img.get("src" ) img_resp = requests.get(src) img_name = src.split("/" )[-1 ] with open ("source/" + img_name, mode="wb" ) as f: f.write(img_resp.content) print(img_name + " has been downloaded!" )

Xpath 基本使用 XPath是一门在 XML 文档中查找信息的语言。XPath可⽤来在 XML文档中对元素和属性进行遍历,而我们熟知的HTML恰巧属于XML的⼀个⼦集,所以完全可以用Xpath去查找html中的内容。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 from lxml import etreexml = """ <book> <id>1</id> <name>野花遍地⾹</name> <price>1.23</price> <nick>臭⾖腐</nick> <author> <nick id="10086">周⼤强</nick> <nick id="10010">周芷若</nick> <nick class="joy">周杰伦</nick> <nick class="jolin">蔡依林</nick> <div> <nick>惹了</nick> </div> </author> <partner> <nick id="ppc">胖胖陈</nick> <nick id="ppbc">胖胖不陈</nick> </partner> </book> """ tree = etree.XML(xml) result = tree.xpath("/book" ) result = tree.xpath("/book/name" ) result = tree.xpath("/book/name/text()" ) result = tree.xpath("/book/author/nick" ) result = tree.xpath("/book/author/nick/text()" ) result = tree.xpath("/book/author/div/nick/text()" ) result = tree.xpath("/book/author//nick/text()" ) result = tree.xpath("/book/author/*/nick/text()" ) print(result)

1 2 3 4 5 6 7 8 9 10 11 12 13 from lxml import etreetree = etree.parse("my_html.html" ) result = tree.xpath("/html/body/ul/li/a/text()" ) result = tree.xpath("/html/body/ul/li[1]/a/text()" ) result = tree.xpath("/html/body/ol/li/a[@href='dapao']/text()" ) result_list = tree.xpath("/html/body/ol/li" ) for result in result_list: r1 = result.xpath("./a/text()" ) print(r1) r2 = result.xpath("./a/@href" ) print(r2)

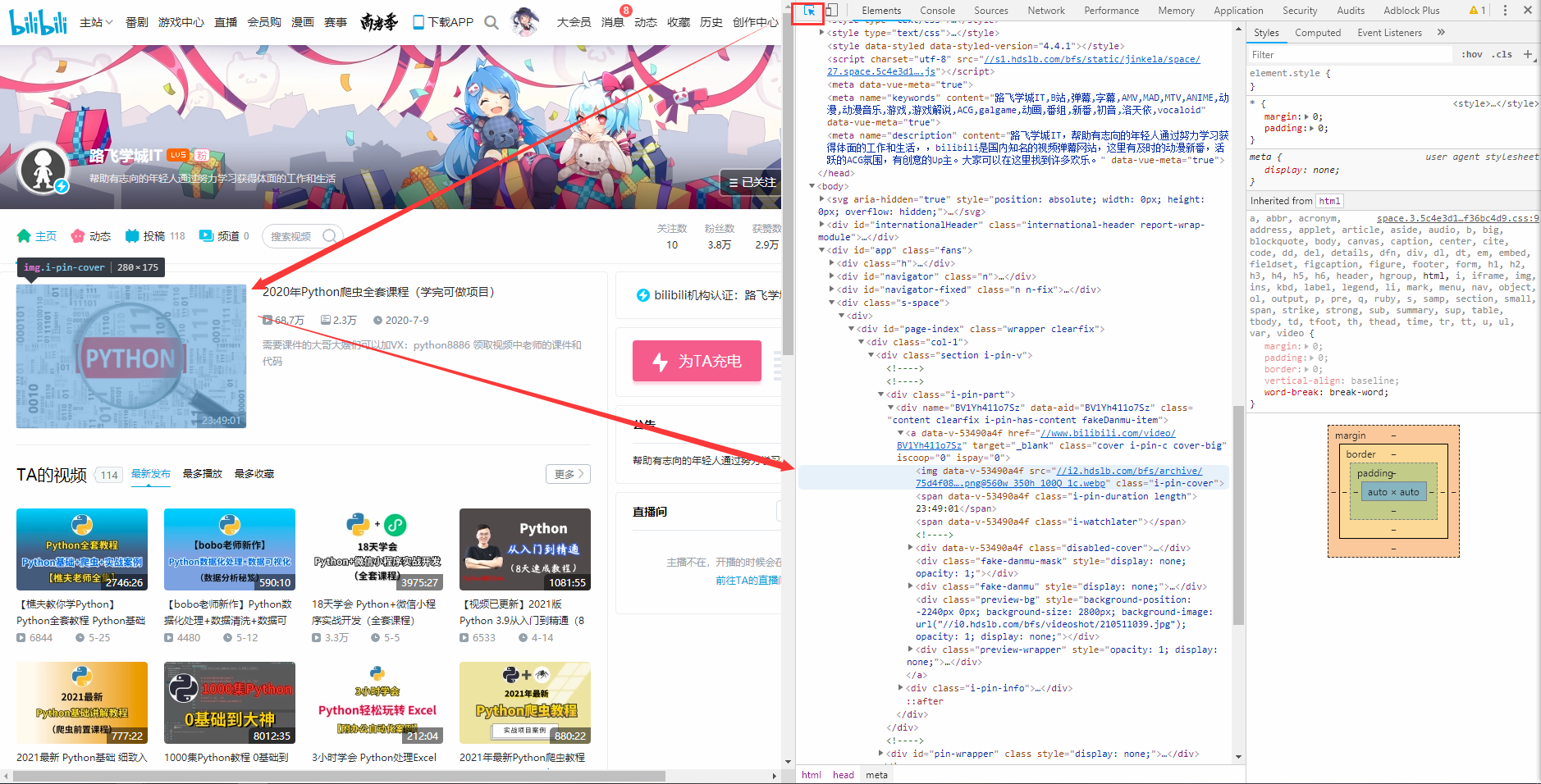

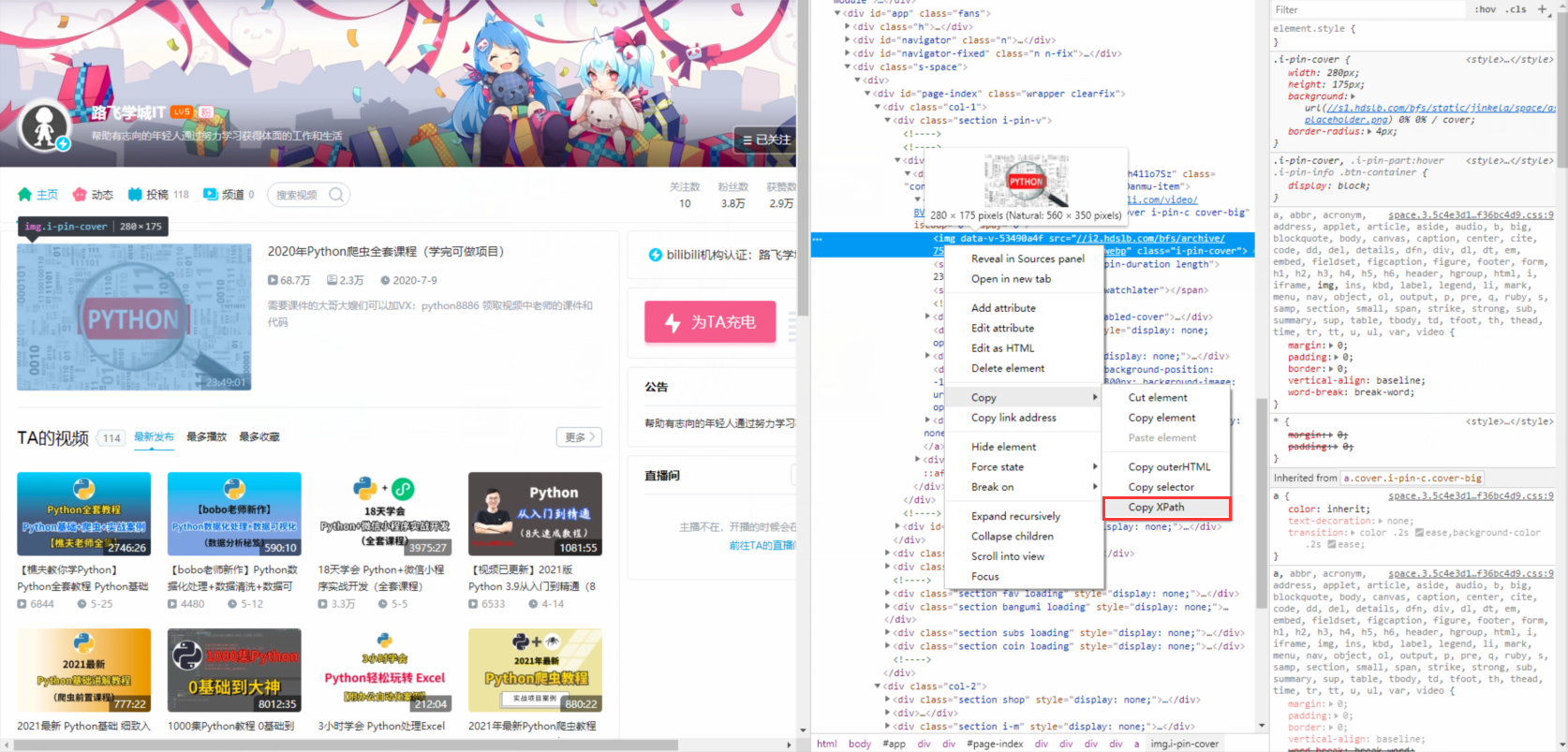

开发者工具使用技巧

实战案例 猪八戒网 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 import requestsfrom lxml import etreeimport csvf = open ("猪八戒.csv" , mode="w" , encoding="utf-8" , newline="" ) csv_writer = csv.writer(f) url = "https://beijing.zbj.com/search/f/?type=new&kw=saas" resp = requests.get(url=url) html = etree.HTML(resp.text) divs = html.xpath("/html/body/div[6]/div/div/div[2]/div[5]/div[1]/div" ) for div in divs: price = div.xpath('./div/div/a[1]/div[2]/div[1]/span[1]/text()' )[0 ].strip("¥" ) title = "SAAS" .join(div.xpath('./div/div/a[1]/div[2]/div[2]/p/text()' )) company_name = div.xpath('./div/div/a[2]/div[1]/p/text()' )[0 ] location = div.xpath("./div/div/a[2]/div[1]/div/span/text()" )[0 ] csv_writer.writerow([price, title, company_name, location]) print([price, title, company_name, location])